Artificial Intelligence (AI) has been hailed as a revolutionary technology – one that will reshape industries, economies, and our way of life. But as with every major technological shift, the question remains: How much of this is real innovation, and how much is just hype?

To answer that, we need to take a step back and examine AI’s history, its potential, and the real-world challenges that might limit its impact.

Setting the Stage

AI is on everyone’s lips. From business leaders to policymakers, there’s a sense that AI represents a significant inflection point. But if we look at history, AI has gone through multiple hype cycles, with periods of intense enthusiasm followed by deep disillusionment.

I’ve spent years working in innovation, watching trends rise, fall, and sometimes rise again. The research and development of a promising technology is very different from the commercial exploitation of that technology. AI is no different, and we must view it through a critical, evidence-based lens. As with other technologies, I’d argue that its true value depends on:

- how it is applied in practical contexts,

- how accessible it is, and

- whether businesses (and individuals) are willing to pay for it.

A (Very) Brief History of AI: The Boom-and-Bust Cycle

Understanding AI’s past helps us predict where it might go.

AI has long been a topic of intrigue, dating back to Alan Turing’s 1950 paper “Computing Machinery and Intelligence,” where he introduced the concept of the imitation game (now known as ‘the Turing Test’). The fundamental question was: Can machines think?

AI development can trace its roots back to 1956, when there was a concerted effort to tackle intelligence as an engineering problem. Researchers believed they could crack AI’s core challenges quickly, and clear research directions were set.

However, the field quickly hit challenges that, at the time, appeared very difficult to address with the available technologies, leading to the first AI winter. But this downturn exposed the challenges that needed to be solved, which eventually gave rise to expert systems in the 1980s and sparked AI’s first commercial boom.

The cycle repeated:

- More research → New problems uncovered → Next set of solutions sought.

- AI research began embracing probability and statistical methods, realising that intelligence is impressionistic and uncertain, not necessarily always rule-based.

- This led to the second pit of despair as expert systems fell out of favour.

Then AI found a light at the end of the tunnel with the rise of deep learning and large-scale neural networks, which initially addressed challenges in image recognition in the late 2000s and early 2010s. Engineers refined the problem further, culminating in the 2017 paper “Attention Is All You Need,” which introduced the Transformer architecture.

From this breakthrough came GPT-3 in 2020, followed by the rapid ascent of ChatGPT in late 2022, which amazed the world. Soon after, Sam Altman (CEO of OpenAI) was riding in a very expensive car, a fitting metaphor for AI’s resurgence.

We are all likely to agree that we’re in another boom period, with generative AI dominating discussions. But history suggests that we should tread carefully—are we heading for another trough of despair, or will AI finally break free from this cycle?

The Current AI Landscape: What’s Happening Now?

Where Are We on the Gartner Hype Cycle?

If we look at the Gartner Hype Cycle, AI is firmly at the “Peak of Inflated Expectations”, the stage where wild claims about AI’s potential proliferate, often outpacing reality. According to Gartner’s graph, we should now be heading into the “Trough of Disillusionment”.

Major AI developments continue at a breakneck pace. Here are just some recent announcements that illustrate the speed:

- Dec 2024: OpenAI announces o3, its latest reasoning model.

- Jan 2025: The UK Government releases an AI Opportunities Action Plan.

- Jan 2025: China’s DeepSeek R1 makes waves in the AI race.

- Jan 2025: A $500 billion AI initiative is launched in the U.S.

The danger? Hitting the inevitable “Trough of Disillusionment” when people realise AI has limitations.

I disagree with Gartner: I do not think GenAI is leaving the peak of expectations for the “trough”. I think it is still being pumped up. When you have folks talking of AI achieving consciousness, you are getting a little ahead of yourself, I would think.

Just because you wish it to be true (the hype) doesn’t make it true (the reality), and it certainly doesn’t make a technology a success.

A quick sideways step… DeepSeek

Now, let’s turn our attention to one of the most significant recent events in AI: the DeepSeek announcement. While there may be debate over the veracity of its claims and the timing of the announcement, the implications are noteworthy. At its core, DeepSeek represents a major perturbation to what we have come to regard as the ‘accepted norms’ of the AI ecosystem:

- Producing capable large language models is very, very expensive – DeepSeek claims to have produced its model at a fraction of its competitors’ cost.

- Closed-source models are more capable than open-source ones – DeepSeek is (seemingly) capable, and it is an open-source offering.

- Small companies can’t compete with the vast monies and computing resources of the AI behemoths – DeepSeek is a small company, and its product represents healthy competition, forcing other AI companies to innovate and become more cost-efficient.

Earlier, I introduced a three-legged stool framework for technology to become a commercial success:

- A real problem to solve

- A willingness to pay for the solution

- Accessibility (ease of use)

DeepSeek’s approach directly addresses two of these factors:

- It reduces cost pressures (easing the issue of willingness to pay).

- It makes AI more accessible by lowering entry barriers.

DeepSeek’s announcement also raises fundamental industry questions:

- Which ecosystem model will prevail – Closed Source AI (proprietary) or Open-Source AI (publicly accessible & modifiable)?

- What level of AI performance is “good enough” to drive widespread adoption?

If lower costs and open-source availability truly drive higher adoption, then the AI industry may be on the brink of an accelerated transformation. If not, the barriers to real-world AI integration remain formidable.

There’s a historical pattern known as the Jevons Paradox, which suggests that as technology makes the use of a resource more efficient, total consumption of that resource increases, negating the gains.

AI may boost productivity, but will we see real economic benefits, or will we just find more ways to waste time? Email and instant messaging were meant to improve communication efficiency, yet they often lead to information overload and reduced deep work.

This ties into Jevons Paradox:

- If AI costs decrease, does adoption increase proportionally?

- If yes → Happy Days! AI’s influence will expand significantly.

- If no → Oh well… the industry still faces barriers to adoption, despite lower costs.

If AI follows a similar pattern, we need to rethink how we measure productivity improvements.
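To make the Jevons question concrete, here is a minimal sketch that assumes a constant-elasticity demand curve. The function name, the baseline figures, and the elasticity values are illustrative assumptions only, not measurements of any real AI market.

```python
def usage_and_spend(price, base_price=1.0, base_usage=100.0, elasticity=1.0):
    """Constant-elasticity demand (an assumed model):
    usage = base_usage * (price / base_price) ** -elasticity.
    Returns (usage, total spend) at the given price."""
    usage = base_usage * (price / base_price) ** (-elasticity)
    return usage, usage * price


# Hypothetical scenario: the unit price of AI halves from 1.0 to 0.5.
# Baseline total spend is 100.0 (100 units at price 1.0).
for elasticity in (0.5, 1.0, 2.0):
    usage, spend = usage_and_spend(0.5, elasticity=elasticity)
    print(f"elasticity={elasticity}: usage={usage:.1f}, total spend={spend:.1f}")
```

Under this assumed model, an elasticity above 1 means that halving the price more than doubles usage, so total spend rises (the Jevons outcome). An elasticity below 1 means adoption grows less than proportionally, which corresponds to the "If no" branch above.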

Generative AI: What Problem Is It Actually Solving?

One of the biggest challenges in AI is defining its purpose.

I asked GPT-4 to explain what Generative AI is for, and it responded:

“In essence, the purpose of generative AI is to expand the capabilities of AI from analytical functions to creative and generative ones, opening new avenues for innovation, automation, and personalisation across various domains.”

This sounds impressive but… what does it actually mean? It did not say anything about what problems it was meant to solve. That is not so surprising: after all, we have the problems and it has the solutions, right?

So, what problems do we have that need solving?

There are several possibilities, but getting it to do your child’s homework is probably not the one that’s really going to ‘move the needle’. If it is, then we have completely missed the point.

So, if not homework, then… improve productivity! But how?

So, I consulted a member of the younger generation (my son) to see if he could explain it to me – since it is his generation that will be the beneficiary of this technology once it has matured.

His approach was to draw a pie chart and break it into segments of activities that I commonly do (my son knows me too well).

The pie chart for the working day included writing and replying to email, making calls, reading papers, designing solutions, writing code, re-writing code, debugging the re-written code, and thinking.

He then pointed out that I really procrastinate with emails, and GenAI could really help me with that, saving time there that could usefully be employed elsewhere.

“Dad – you will become more efficient”, he stated.

Genius! I thought. Right, there is a plausible argument for productivity.

Productivity is a problem that does need solving… but is it a big enough problem?

The Elephant in the Room: AI & Productivity in the UK

The UK has long struggled with low productivity growth, attributed to:

- An ageing population and workforce shortages.

- A growing skills gap, particularly in technical fields.

- Underinvestment in technology and innovation.

The promise of Generative AI is that it can help automate tasks, reduce costs, and make businesses more efficient. But can it close the productivity gap?

The Problem of Data: Who Owns the AI Goldmine?

AI runs on data. The most valuable AI applications rely not just on public data, but also on proprietary enterprise data.

In modern enterprises, private data is often the most valuable asset. But how AI models interact with data varies significantly:

- Top Left: A general AI model trained on public data.

- Bottom Left: A general AI model fine-tuned with specialised data, making it an expert in a particular field (e.g., medicine, physics).

- Top Right: A model using enterprise-specific private data to enhance its capabilities while operating within the same cloud where the data is stored.

- Bottom Right: A more complex scenario where data must be moved between clouds, adding costs, security risks, and logistical challenges.

Businesses are increasingly reliant on a single IT provider for their AI and cloud services. While this may offer convenience, it also introduces significant risks. Placing too much trust in one AI or cloud vendor exposes enterprises to potential supplier failure, exploitation, and monopoly pricing.

A further complication arises when businesses move data between different cloud providers. High data transfer fees can make frequent AI retraining prohibitively expensive. This financial burden discourages companies from switching.
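As a rough illustration of how transfer fees scale with retraining frequency, the back-of-the-envelope sketch below multiplies dataset size, a per-gigabyte egress fee, and the number of retraining runs per year. The function name and every figure are placeholder assumptions; real cloud pricing is tiered and varies by provider.

```python
def annual_egress_cost(dataset_gb, fee_per_gb, retrains_per_year):
    """Cost of copying the full dataset out of one cloud for every retraining run."""
    return dataset_gb * fee_per_gb * retrains_per_year


# Placeholder inputs: a 50,000 GB dataset and a hypothetical fee of 0.10
# currency units per GB transferred out.
for retrains in (1, 12, 52):
    cost = annual_egress_cost(50_000, 0.10, retrains)
    print(f"{retrains} retraining runs/year -> {cost:,.0f} per year in transfer fees")
```

The point of the sketch is only that the cost grows linearly with how often the data has to cross a cloud boundary, which is why frequent retraining across providers becomes hard to justify.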

A growing concern in AI development is who truly owns and controls the data used in training AI models. When AI is offered as a Software as a Service (SaaS), the lines of ownership become unclear – does the enterprise retain control, or can the AI vendor claim ownership over the model trained on the enterprise’s data?

Adding to this complexity, data sovereignty regulations are becoming more stringent. Many governments now require data to remain within national or regional borders, or restrict how it may be transferred abroad, as seen with EU rules that limit transfers of personal data outside Europe. These regulatory constraints limit how AI can be deployed across different regions, further complicating business strategies.

And then there is the timeless challenge: Garbage In, Garbage Out. Even the most advanced AI models are only as good as the data they are trained on. Poor-quality data leads to poor-quality AI outputs. No matter how powerful an AI system is, if it learns from biased, incomplete, or inaccurate data, its predictions and insights will be unreliable.

While AI holds immense potential, practical business issues such as cost, security, vendor dependence, and data sovereignty may slow its adoption and effectiveness. And these challenges don’t even touch on the deeper technical hurdles that lie ahead.

Scaling AI: Does Bigger Mean Better?

If AI is to truly improve productivity, it must go beyond simple automation and enhance the efficiency of enterprises in a meaningful way. At its core, this means leveraging data to drive better decision-making and reasoning.

In its most basic form, AI systems already interact with enterprise data – chatbots, for example, process information to answer questions. But true productivity gains require something deeper: AI systems that can reason over complex, disparate datasets to provide actionable insights. This is where Generative AI must evolve from a tool for generating text into one that can support strategic business decision-making.

The Need for AI Reasoning and the Challenge of Emergent Behaviours

To make enterprises more efficient, AI needs to be capable of undertaking advanced reasoning, as people can. But reasoning is not something that can simply be programmed – it is an observed emergent property of AI models as they scale.

Consider how human intelligence develops (grossly simplified):

- Babies are born with approximately 86 billion neurons.

- Over childhood and adolescence, humans undergo about 18 years of reinforcement learning.

- The result is a wide spectrum of intelligence, abilities, and reasoning skills.

Similarly, AI models improve as they grow in complexity. As the number of parameters in a neural network increases, certain cognitive-like behaviours start to emerge.

The graphs above illustrate this phenomenon:

- The larger the AI model, the better it performs in various tasks.

- At certain scale thresholds, entirely new behaviours emerge – both desirable and undesirable.

When it comes to AI for productivity, enterprises must ask themselves:

- Do we need an AI that can write poetry in the style of Shakespeare?

- Or do we need an AI that can effectively reason over diverse datasets with minimal errors?

The answer depends entirely on the type of business. AI’s utility is not just about intelligence – it is about having the right intelligence for the job.

Balancing AI Scale, Performance, and Cost

If reasoning is an emergent property of AI, then businesses must focus on achieving the right scale where reasoning emerges without unnecessary complexity or cost. This raises several practical considerations:

- How do we measure and guarantee the quality of emergent reasoning behaviours?

- What level of reasoning is “good enough” for practical enterprise use?

- What cost implications come with scaling AI models for improved reasoning?

Simply put, there is no value in deploying an AI tool that does not align with an enterprise’s specific needs. If AI reasoning is to be a real driver of productivity, organisations must carefully assess the costs versus the benefits.

Balancing Cost, Efficiency, and AI Implementation Choices

At its core, the discussion around AI in enterprises often comes down to cost. Like any technology, AI has multiple possible implementations, each with its own advantages and disadvantages.

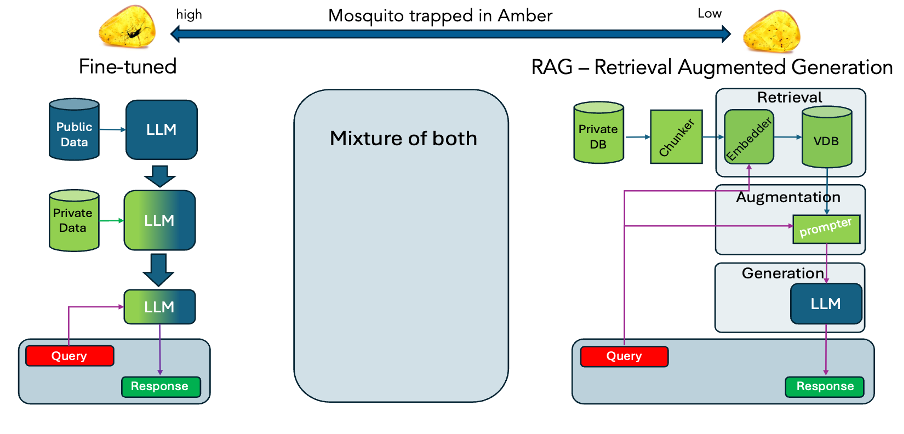

This diagram illustrates the spectrum of AI solutions available:

- Left – more expensive, fine-tuned AI models that require frequent retraining.

- Right – less expensive Retrieval-Augmented Generation (RAG)-based solutions, which rely on retrieving existing data rather than retraining models.

- In the centre – Hybrid approaches that combine elements of both.

Each approach has implications for data ownership, cost, and enterprise fit:

- Fine-tuned models (left side) offer deep customisation but come with higher costs due to frequent retraining.

- RAG-based solutions (right side) minimise retraining costs and eliminate ownership concerns but may be less optimised for specific enterprise needs.

- Hybrid solutions (middle), including agentic AI approaches, attempt to strike a balance between cost and efficiency.
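To illustrate why RAG avoids retraining, here is a toy sketch of the retrieval step: documents are scored against the question by simple word overlap, and the best match is pasted into the prompt. The documents, function names, and scoring rule are all hypothetical. Production systems typically use embedding-based search and then send the prompt to a language model, which is not shown here.

```python
import re


def tokenize(text):
    """Lowercase a string and return its words as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Place the retrieved text ahead of the question; the model itself is unchanged."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Hypothetical enterprise documents; updating this list needs no retraining.
docs = [
    "Refunds are issued within 14 days of a cancelled booking.",
    "The office is closed on public holidays.",
]
print(build_prompt("How many days do refunds take?", docs))
```

In this sketch the private data stays in the document store and only reaches the model at question time, which is the property the RAG side of the spectrum relies on. A fine-tuned model would instead absorb that data into its weights and need retraining whenever the data changes.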

The choice between these approaches depends on an enterprise’s:

- Budget constraints – How much is AI adoption worth to them?

- Access to private data – Does AI need to process proprietary information?

- Risk tolerance – How much control do they need over their data and AI behaviour?

- Market fit – Does a highly specialised model offer enough ROI, or is a general solution sufficient?

Ultimately, the best commercial AI solution will likely be a combination of fine-tuning and RAG, balancing cost-effectiveness with the need for tailored intelligence.

And if AI sufficiently improves efficiency at a reasonable cost, then the prognosis remains positive—after all, humans have always been willing to pay for convenience and efficiency.

Final Thoughts

Throughout this discussion, we have explored the key elements required for the successful commercialisation of any technology. For AI, specifically Generative AI (GenAI), to achieve widespread adoption, three fundamental criteria must be met:

- A problem to solve. Clearly, AI has potential applications in multiple industries, from automation to knowledge management.

- A willingness to pay. This is less certain—it depends on whether businesses see AI as delivering real economic value.

- Accessibility to the solution. This is likely to improve over time as AI becomes more refined and easier to integrate into workflows.

However, accessibility and willingness to pay may be influenced by secondary effects, such as data privacy concerns, business resilience, and regulatory compliance.

GenAI is undeniably impressive, and it will continue to evolve and improve. However, there remains a lingering question: Is AI a transformative force, or is it still a technology searching for a problem to solve?

The AI industry is currently riding a wave of hype, but history warns us to be cautious. We have seen this before—most notably with expert systems in the 1980s, which were initially hailed as groundbreaking but ultimately failed to meet all the expectations of AI.

AI has already been deployed with mixed success and occasional frustration. Some examples illustrate both its potential and its risks:

- Air Canada (2022): A chatbot unintentionally set company policy on the fly, leading to confusion and legal liabilities for Air Canada.

- The AI Lawyer (2023): A lawyer used GenAI to find case law, but the AI hallucinated legal references, resulting in the lawyer being sanctioned.

While these failures were largely due to improper implementation, or blind faith that the AI response was correct, they highlight a key concern: AI is not yet mature enough for universal adoption across all industries.

The biggest concern with AI today is that it risks overpromising and under-delivering. Businesses investing heavily in AI may find that the reality does not match the expectations, leading to frustration and disillusionment. Additionally, there is an opportunity cost—if businesses overinvest in AI at the expense of other innovations, they may miss out on more effective solutions to their problems.

Should We Trust the Hype? – No. History tells us to be sceptical of technological hype cycles. While AI has enormous potential, it must prove that it can deliver consistent, practical value across industries before we can declare it a truly transformative force.

For now, cautious optimism—rather than blind faith—is the best approach.